Today, confidence in data quality is critically important as higher education leaders must respond to various political and economic pressures. Colleges and universities are also under the microscope to ensure fiscal responsibility while best serving students. Now more than ever, higher-ed Finance teams need trusted, accurate and timely data to make the right decisions for each school.

University boards are also asking institutions to provide more transparency and accountability. This request means having a clear strategy for ongoing robust data quality management is more important than ever. Why? Better decisions create better outcomes. And high-quality data gives institutions the confidence in data to get decisions right – which, in turn, fuels better student support, more accurate results and reduced risk.

Why Is Data Quality Important?

Research has shown that data quality matters. According to Ventana, almost nine in 10 organizations (89%) reported data-quality issues as an impactful or very impactful barrier for their organization. The impacts cause a lack of trust in the data and wasted resource time in financial and operational processes.

In fact, many institutions are looking to be more data driven. According to The Chronicle of Higher Education, 97% of college administrators surveyed believe that higher education needs to better use data and analytics to make strategic decisions.

As institutions get more complex, the need to focus on data quality grows too. As the adage goes, “garbage in, garbage out,” (see Figure 1) so creating a solid foundation of governed data is imperative for data-driven decision-making. Having one source of trusted data across the institution that can be referenced is imperative to align teams.

What’s Preventing Good Data Quality?

In many cases, institutions are up against the restrictions of disconnected Finance processes, legacy systems and inefficient analytics tools. This red tape has fueled a lack of agility, cumbersome processes and siloed decision-making. Simply put, the solutions in place are not built to work together and thus make data quality management difficult.

Little or no connectivity often exists between the systems, forcing users to manually retrieve data from one system, manually transform the data and then load it into another system leaving no traceability. This lack of robust data quality capabilities creates a multitude of problems. Here are just a few:

- Poor data input with limited validation, affecting trust and confidence in the numbers.

- High number of manual tasks, lowering overall data quality and adding latency to processes.

- No data lineage, preventing drill-down and drill-back capabilities and adding time and manual effort when accessing actionable information for every number.

The solution to disconnected systems may be as simple as transitioning to newer tools and technology. Why? They have more effective integration and built-in validation and control compared to past solutions, which will save time and remove manual tasks.

What’s the Solution?

Some believe Pareto’s law – that 20% of data enables 80% of use cases – applies to data quality. Institutions must therefore take three steps before adopting any project to improve financial data quality:

- Define quality: Determine what quality means to the college or university, agree on the definition and set metrics to achieve the level with which everyone will feel confident.

- Streamline collection of data: Ensure the number of disparate systems is minimized and the integrations use world-class technology with consistency in the data integration processes.

- Identify the importance of data: Know which data is the most critical for the organization and start there – with the 20% – moving on only when the organization is ready.
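One way to make the "start with the 20%" step concrete is to rank data sources by how many reporting or planning use cases each one supports, then stop once a target share of use cases is covered. The sketch below is only an illustration under stated assumptions: the source names, the use cases and the `prioritize_datasets` helper are hypothetical, and a real institution would build this inventory from its own systems.

```python
from typing import Dict, List, Set


def prioritize_datasets(supports: Dict[str, Set[str]], target: float = 0.8) -> List[str]:
    """Greedily pick data sources until `target` share of use cases is covered.

    `supports` maps a data source name to the use cases it enables.
    A use case counts as covered once any chosen source enables it
    (a simplifying assumption for this sketch).
    """
    all_cases = set().union(*supports.values())
    covered: Set[str] = set()
    chosen: List[str] = []
    remaining = dict(supports)
    while remaining and len(covered) < target * len(all_cases):
        # Pick the source that adds the most not-yet-covered use cases;
        # sorting the names first makes ties deterministic.
        best = max(sorted(remaining), key=lambda name: len(remaining[name] - covered))
        if not remaining[best] - covered:
            break  # nothing left adds coverage
        covered |= remaining.pop(best)
        chosen.append(best)
    return chosen


# Hypothetical inventory: five data sources, ten use cases in total.
inventory = {
    "general_ledger": {"close", "budget", "forecast", "board_report",
                       "audit", "cash_flow", "tuition_revenue", "variance"},
    "student_system": {"tuition_revenue", "enrollment_plan"},
    "hr_payroll": {"budget", "headcount_plan"},
    "facilities": {"budget"},
    "grants": {"audit"},
}

print(prioritize_datasets(inventory))
```

In this made-up inventory, one of the five sources already reaches the 80% target, so that source is where data quality work would begin. Raising `target` to `1.0` shows which sources come next when the organization is ready to move on.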

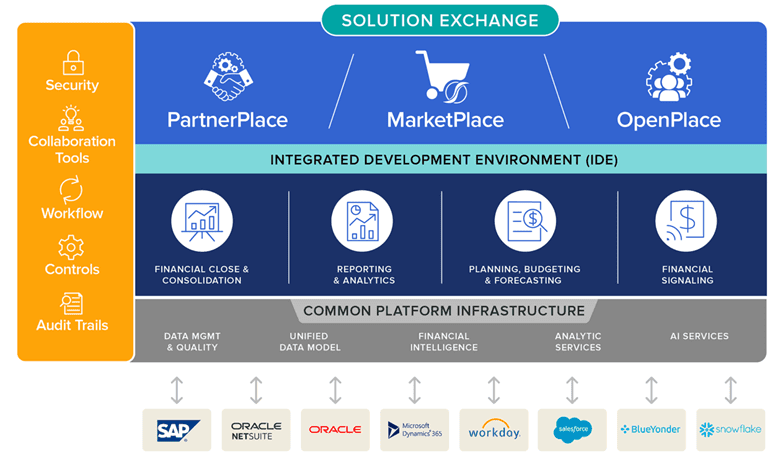

At its core, a fully integrated Finance platform like OneStream with built-in financial data is critical for colleges to drive effective transformation across Finance and different functions and services. What key requirements should be considered? Here are a few key functions:

- 100% visibility from reports to data sources, so all financial and operational data is visible and easily accessible.

- One source of truth for all financial and operational data.

- Guided workflows to protect users from complexity and manual oversights.

Why OneStream for Data Quality Management?

OneStream’s unified platform offers market-leading data integration capabilities with seamless connections to multiple sources, including Finance, HR and student systems. Those capabilities provide unparalleled flexibility and visibility into the data loading and integration process.

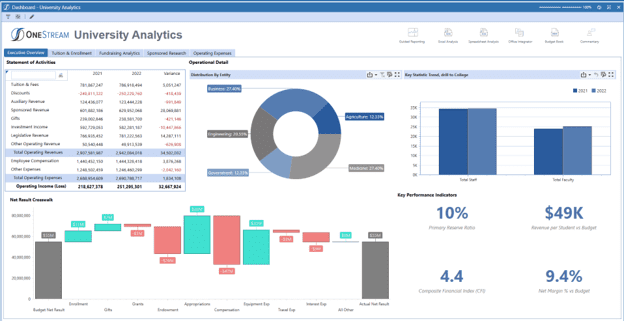

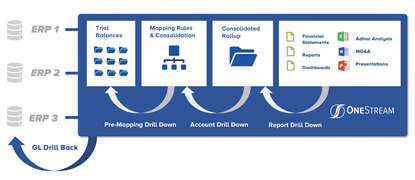

OneStream’s data quality management is a core part of OneStream’s unified platform (see Figure 2). By providing strict controls to deliver confidence and reliability in the data quality, the platform allows organizations to do the following:

- Manage data quality risks using fully auditable integration maps and validations at every stage of the process, from integration to reporting.

- Automate data via direct connections to source databases or via any character-based file format.

- Filter audit reports based on materiality thresholds – ensuring one-time reviews at appropriate points in the process.

- Drill down, drill back and drill through to transactional details for full traceability of every number.
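To illustrate the idea of filtering audit output by a materiality threshold, here is a minimal sketch. It does not use any OneStream API. The `Variance` record, the `material_items` helper and the sample amounts are assumptions made up for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Variance:
    """Difference between what the source system reported and what was loaded."""
    account: str
    source_amount: float
    loaded_amount: float

    @property
    def difference(self) -> float:
        return self.loaded_amount - self.source_amount


def material_items(items: List[Variance], threshold: float) -> List[Variance]:
    """Keep only the variances whose absolute size meets the threshold."""
    return [item for item in items if abs(item.difference) >= threshold]


# Hypothetical audit lines for one load.
audit_lines = [
    Variance("Tuition revenue", 1_250_000.0, 1_250_000.0),
    Variance("Research grants", 480_000.0, 478_500.0),
    Variance("Facilities", 92_000.0, 92_040.0),
]

for item in material_items(audit_lines, threshold=1_000.0):
    print(item.account, item.difference)
```

With a threshold of 1,000, only the research grants line is surfaced, so a reviewer looks at one item instead of three.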

Conclusion

Demands for accuracy, transparency and trust are ever-present in financial and operational reporting, analysis and planning given the detail level needed to guide institutions. Accordingly, universities looking to create a strategy to improve data quality management should consider a CPM software platform with built-in financial data quality to achieve more accurate results and reduce risk.

Learn More

Learn more about how OneStream can produce better data quality and improve accuracy in financial results for higher education.

Today, confidence in data quality is critically important. Demands for accuracy and trust are ever-present in financial and operational reporting, analysis and planning given the detail level needed to guide the business. The growth of artificial intelligence (AI) and machine learning (ML) also demands granular-level quality data. Given those demands, the old “garbage in, garbage out” adage applies more than ever today.

Executives must constantly respond to various – and evolving – changes and thus need trusted, accurate and timely data to make the right decisions regarding any needed actions. Therefore, modern organizations must have a clear strategy for ongoing robust data quality management. Why? Well, better decisions create better outcomes. And high-quality data gives organizations the confidence in data to get decisions right to fuel better performance, more accurate results and reduced risk.

Why Is Data Quality Important?

Data quality matters, as shown by research. According to Ventana, almost nine in 10 organizations (89%) reported data-quality issues as an impactful or very impactful barrier. Those impacts cause a lack of trust in the data and wasted resource time in financial and operational processes.

As organizations turn to more modern technology to help drive better future directions, the emphasis on data quality management in enterprise systems has only increased. In a report on closing the data–value gap, Accenture said that, “without trust in data, organizations can’t build a strong data foundation.” Only one-third of firms, according to the report, trust their data enough to use it effectively to derive value.

In short, the importance of data quality is increasingly clear. As organizations get more complex and data volumes grow ever larger, attention naturally shifts to the quality of the data being used. Not to mention, as more advanced capabilities are explored and adopted – such as AI and ML – organizations must first examine their data and then take steps to ensure effective data quality. Why are these steps necessary? Simply put, the best results from AI, ML and other advanced technologies are entirely dependent on good, clean quality data right from the start.

What’s Preventing Good Data Quality?

In many cases, legacy CPM and ERP systems were simply not built to work together, making data quality management difficult. Such systems also tended to remain separate as siloed applications, each with its own purpose. Often, little or no connectivity exists between the systems, forcing users to manually retrieve data from one system, manually transform the data and then load it into another system.

This lack of robust data quality capabilities creates a multitude of problems. Here are just a few:

- Poor data input with limited validation, affecting trust and confidence in the numbers.

- High number of manual tasks, lowering overall data quality and adding latency to processes.

- No data lineage, preventing drill-down and drill-back capabilities and adding time and manual effort in accessing actionable information for every number.

The solution may be as simple as demonstrating how much time can be saved by transitioning to newer tools and technology. Via such transitions, reducing the time investment can dramatically improve the quality of the data via effective integration with both validation and control built into the system itself.

What’s the Solution?

Some believe Pareto’s law – that 20% of data enables 80% of use cases – applies to data quality. Organizations must therefore take 3 steps before adopting any project to improve financial data quality:

- Define quality – Determine what quality means to the organization, agree on the definition and set metrics to achieve the level with which everyone will feel confident.

- Streamline collection of data – Ensure the number of disparate systems is minimized and the integrations use world-class technology with consistency in the data integration processes.

- Identify the importance of data – Know which data is the most critical for the organization and start there – with the 20% – moving on only when the organization is ready.

At its core, a fully integrated CPM software platform with built-in financial data quality (see Figure 1) is critical for organizations to drive effective transformation across Finance and Lines of Business. A key requirement is providing 100% visibility from reports to data sources – meaning all financial and operational data must be clearly visible and easily accessible. Key financial processes should be automated. Plus, using a single interface would mean the enterprise can utilize its core financial and operational data with full integration to all ERPs and other systems.

Figure 1: Built-In Financial Data Quality Management in OneStream

The solution should also include guided workflows to protect business users from complexity. How? By uniquely guiding them through all data management, verification, analysis, certification and locking processes.

Why OneStream for Data Quality Management?

OneStream’s unified platform offers market-leading data integration capabilities with seamless connections to multiple sources. Those capabilities provide unparalleled flexibility and visibility into the data loading and integration process.

OneStream’s data quality management is not a module or separate product, but rather a core part of OneStream’s unified platform. The platform provides strict controls to deliver confidence and reliability in the data quality by allowing organizations to do the following:

- Manage data quality risks using fully auditable integration maps and validations at every stage of the process, from integration to reporting.

- Automate data via direct connections to source databases or via any character-based file format.

- Filter audit reports based on materiality thresholds – ensuring one-time reviews at appropriate points in the process.

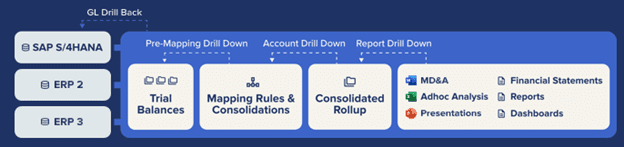

The OneStream Integration Framework and prebuilt connectors offer direct integration with any open GL/ERP or other source system (see Figure 1). That capability provides key benefits:

- Fast, efficient direct-to-source system integration processes.

- Ability to drill down, drill back and drill through to transactional details for full traceability of every number.

- Direct integration with drill back to over 250 ERP, HCM, CRM and other systems (e.g., Oracle, PeopleSoft, JDE, SAP, Infor, Microsoft AX/Dynamics and more).
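Drill back depends on each loaded number keeping a reference to the source records behind it. The sketch below shows that general idea only. It is not the OneStream Integration Framework, and the field names (`system`, `txn_id`, `account`, `amount`) and both helper functions are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def load_with_lineage(rows: List[dict]) -> Dict[str, dict]:
    """Aggregate amounts by account and remember which source rows fed each total."""
    balances: Dict[str, dict] = defaultdict(lambda: {"amount": 0.0, "sources": []})
    for row in rows:
        entry = balances[row["account"]]
        entry["amount"] += row["amount"]
        # Keep a pointer back to the originating system and transaction.
        entry["sources"].append((row["system"], row["txn_id"]))
    return dict(balances)


def drill_back(balances: Dict[str, dict], account: str) -> List[Tuple[str, str]]:
    """Return the (system, transaction id) pairs behind one reported number."""
    return balances[account]["sources"]


# Hypothetical transactions from two source systems.
transactions = [
    {"system": "ERP-A", "txn_id": "INV-1", "account": "Revenue", "amount": 100.0},
    {"system": "ERP-B", "txn_id": "INV-7", "account": "Revenue", "amount": 50.0},
    {"system": "ERP-A", "txn_id": "PO-3", "account": "Expenses", "amount": -30.0},
]

loaded = load_with_lineage(transactions)
print(loaded["Revenue"]["amount"], drill_back(loaded, "Revenue"))
```

Because the source references travel with the total, a question about the Revenue figure can be answered by listing the two transactions behind it rather than by searching each ERP system by hand.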

Delivering 100% Customer Success

Here’s one example of an organization that has streamlined data integration and improved data quality:

“Before OneStream, we had to dig into project data in all the individual ERP systems,” said Joost van Kooten, Project Controller at Huisman. “OneStream helps make our data auditable and extendable. It enables us to understand our business and create standardized processes within a global system. We trust the data in OneStream, so there are no disagreements about accuracy. We can focus on the contract, not fixing the data.”

Like all OneStream customers, Huisman found that the confidence from having “one version of the truth” is entirely possible with OneStream.

Learn More

If your Finance organization is being hindered from unleashing its true value, maybe it’s time to evaluate your internal systems and processes and start identifying areas for improvement. To learn how, read our whitepaper Conquering Complexity in the Financial Close.

Introduction

In the bustling world of artificial intelligence (AI) one saying perfectly encapsulates the essence of the work – “garbage in, garbage out.” Why? This mantra underscores a truth often underplayed amid the excitement of emerging technologies and algorithms used across FP&A teams: data quality is a decisive factor for the success or failure of any machine learning (ML) project.

Big data, often referred to as the “new oil,” fuels the sophisticated ML engines that drive decision-making across industries and processes. But just as a real engine cannot run effectively on substandard fuel, ML models trained on poor-quality data will undoubtedly produce inferior results. In other words, for AI in FP&A processes to prove successful, data quality is essential. Read on for more in our first post from our AI for FP&A series.

Understanding the Importance of Data Quality

Data scientists spend 50%-80% of their time collecting, cleaning and preparing data before it can be used to create valuable insights. This time investment is a testament to why “garbage in, garbage out” isn’t a warning but a rule to live by in the realm of AI and ML. Particularly for FP&A, that rule demonstrates why skimping on data quality can lead to inaccurate forecasts, biased results and a loss of trust in ground-breaking AI and ML systems.

Unleashing Data with Sensible ML

In the current digital age, businesses have unprecedented access to vast quantities of data. The data lays a crucial groundwork for making significant business decisions. But to ensure the data available to employees is reliable, visible, secure and scalable, companies must make substantial investments in data management solutions. Why? Substandard data can trigger catastrophic outcomes that could cost millions. If data used to train ML models is incomplete or inaccurate, this could lead to inaccurate predictions with consequential business decisions that lead to loss of revenue. For example, if bad data causes incorrect forecasts about demand, this could lead to the company producing too little or too much of the product, resulting in lost sales, excess inventory costs, or rush shipments and overtime labor.

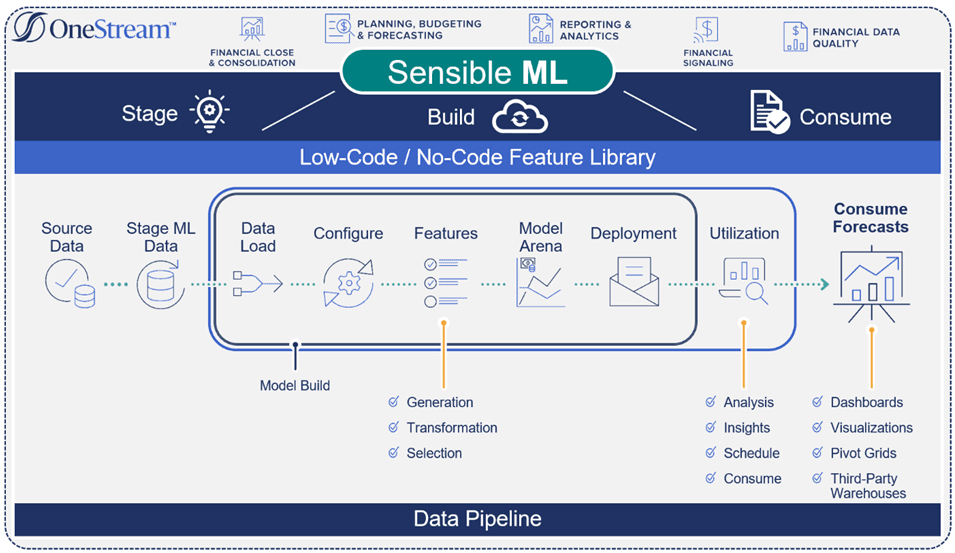

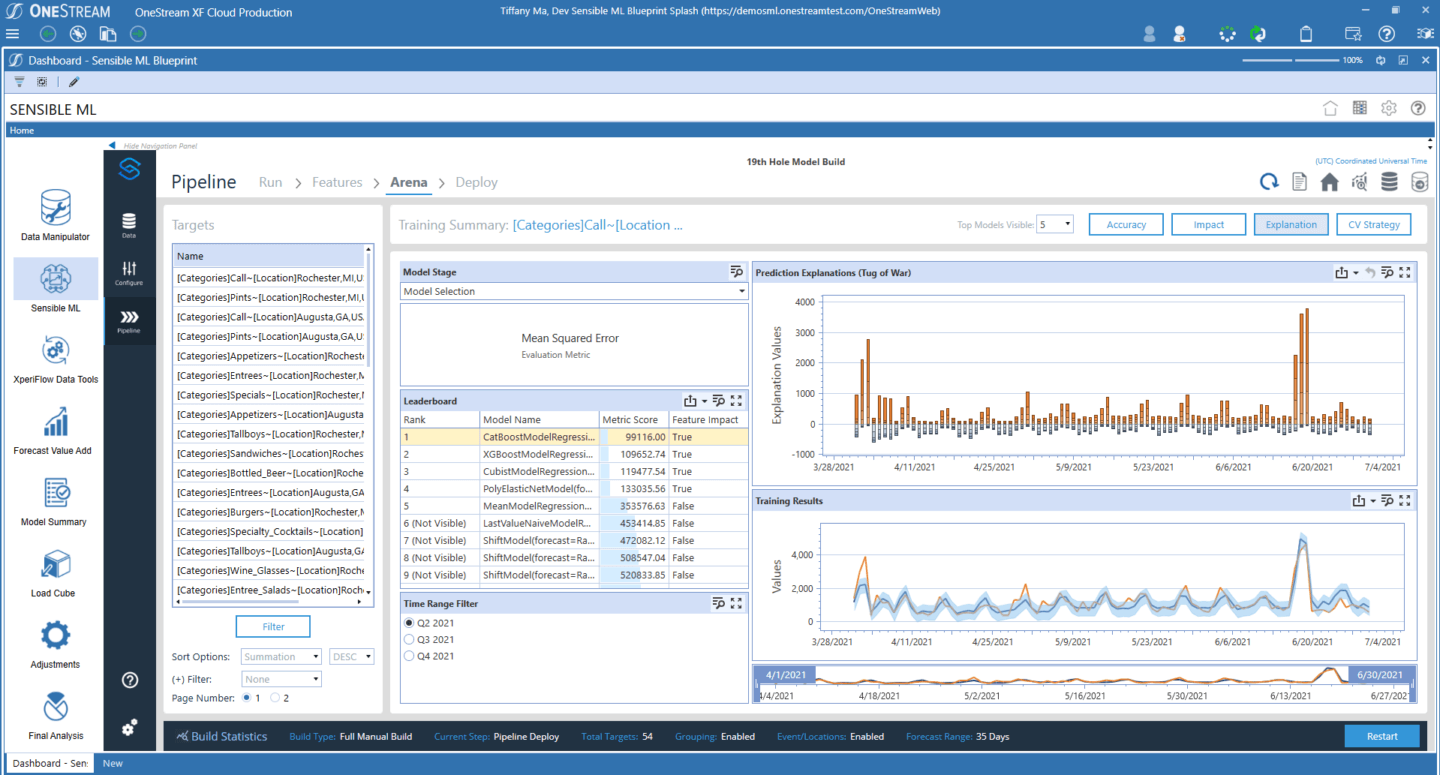

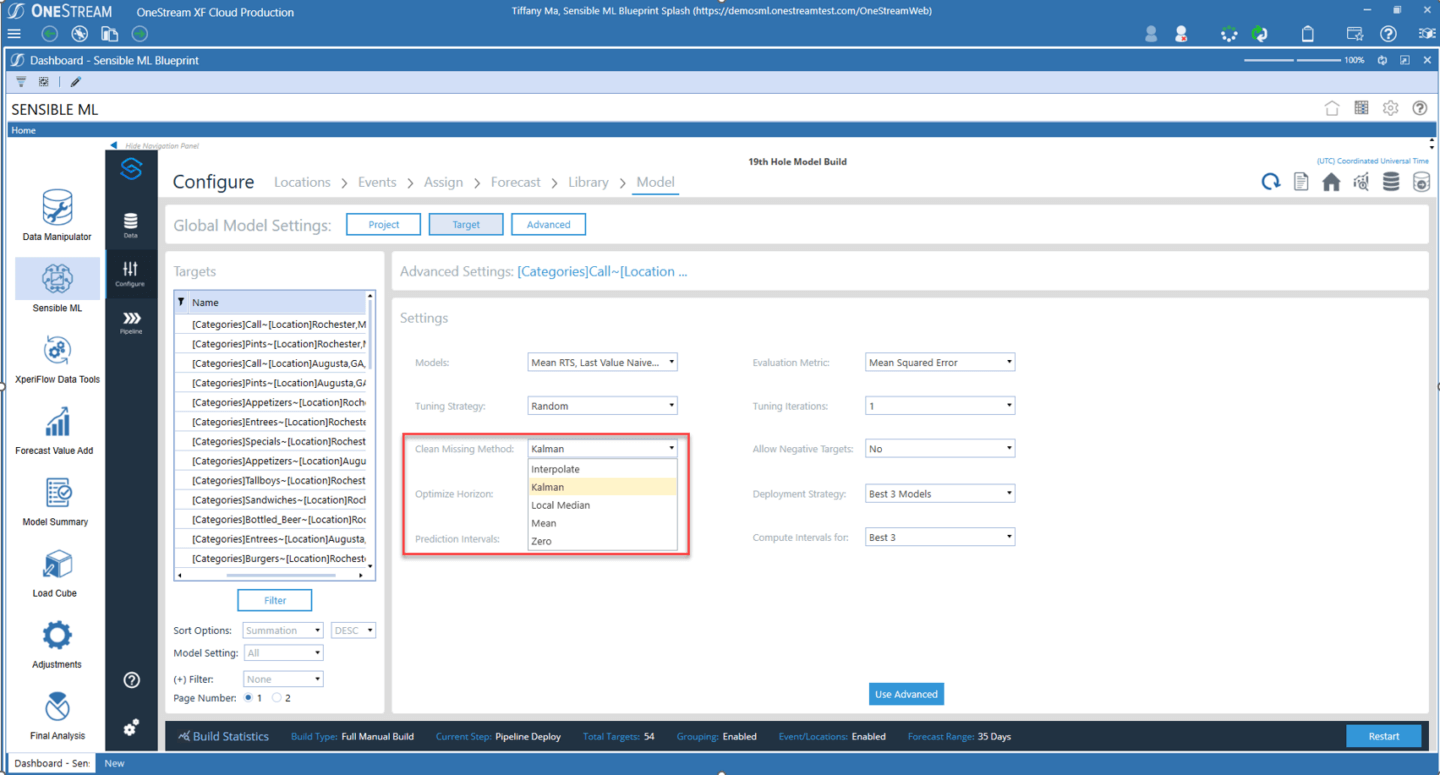

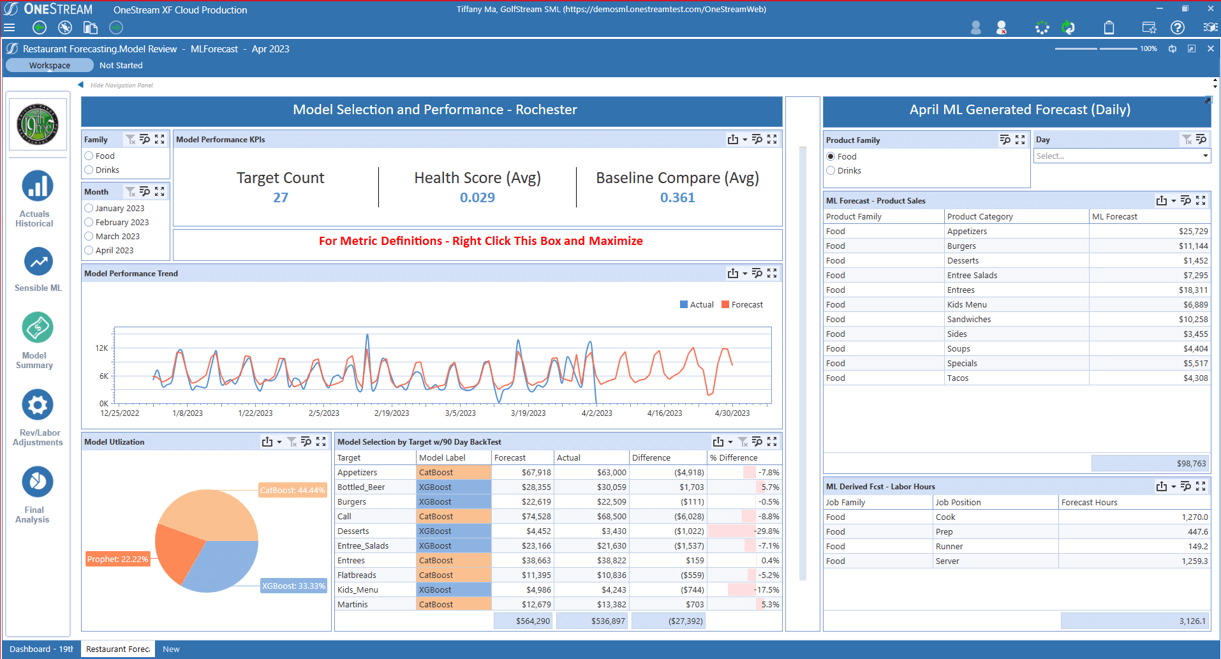

Contrary to “most” predictive analytics forecasting methods, which generate forecasts based on historical results and statistics, OneStream’s Sensible ML incorporates true business insights. These insights include factors such as events, pricing, competitive data and weather – all of which contribute to more accurate and robust forecasting (see Figure 1).

Figure 1: Sensible ML Process Flow

Managing Data End-to-End

FP&A and operational data plays a pivotal role in the success of any machine learning forecasting scenario. However, to create purpose-built data flows that can efficiently scale and provide exceptional user experiences, advanced solutions – such as Sensible ML – are necessary. Sensible ML can expedite and automate crucial decisions throughout the data lifecycle, from the data source to consumption (see Figure 2).

Advanced data flow capabilities encourage the following:

- Trust in the governance of data by ensuring data privacy, compliance with organizational standards and transparent data lineage traceability.

- Enriched internal data with external sources by adding external variables that fuel improvements (e.g., retailers adding external variables to existing data to better profile and recognize customer needs for recommendations, upselling and cross-selling).

- Accelerated data processing by continuously monitoring quality, timeliness and intended context of data.

Figure 2: Sensible ML Pipeline

Sensible ML leverages OneStream’s built-in data management capabilities to ingest source data and business intuition. How? Built-in connectors automatically retrieve external data such as weather, interest rates and other macroeconomic indicators that can be used in the model-building process. While Sensible ML then automatically tests the external data sources without any user intervention, users ultimately decide which data to use.

Using Sensible ML’s Built-in Data Quality

Sensible ML can bring in detailed operational data from any source, including point-of-sale (POS) systems, data warehouses (DW), Enterprise Resource Planning (ERP) systems and multi-dimensional cube data.

The data quality capabilities in Sensible ML provide some of the most robust capabilities available in the FP&A market. Those capabilities include pre- and post-data loading validations and confirmations, full audit, and full flexibility in data manipulation. In addition, Sensible ML’s data management monitors the way data curation typically behaves and then sends alerts when anomalies occur (see Figure 3). Here are a few examples:

- Data Freshness – Did the data arrive when it should have?

- Data Volume – Are there too many or too few rows?

- Data Schema – Did the data organization change?

Figure 3: Sensible ML’s Auto ML data cleansing
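The three example questions above (freshness, volume and schema) map onto simple checks that can run on every incoming batch. The following sketch is a generic illustration, not Sensible ML's implementation. The `check_batch` function, its parameters and the sample point-of-sale rows are all assumptions.

```python
from datetime import datetime
from typing import Dict, List, Set


def check_batch(
    rows: List[Dict[str, object]],
    arrived_at: datetime,
    expected_by: datetime,
    expected_columns: Set[str],
    min_rows: int,
    max_rows: int,
) -> List[str]:
    """Return the names of the checks that failed for one incoming batch."""
    alerts: List[str] = []
    # Data freshness: did the data arrive when it should have?
    if arrived_at > expected_by:
        alerts.append("freshness")
    # Data volume: are there too many or too few rows?
    if not (min_rows <= len(rows) <= max_rows):
        alerts.append("volume")
    # Data schema: did the data organization change?
    if any(set(row) != expected_columns for row in rows):
        alerts.append("schema")
    return alerts


# Hypothetical batch: late, far too small, and one row uses "qty" instead of "units".
batch = [
    {"store": "A", "date": "2024-01-01", "units": 10},
    {"store": "B", "date": "2024-01-01", "qty": 7},
]

print(check_batch(
    batch,
    arrived_at=datetime(2024, 1, 2, 9, 30),
    expected_by=datetime(2024, 1, 2, 6, 0),
    expected_columns={"store", "date", "units"},
    min_rows=100,
    max_rows=10_000,
))
```

Fixed limits are used here to keep the example short. A monitoring tool that learns how data typically behaves would derive the expected arrival time and row counts from history instead.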

In Sensible ML, an intuitive interface offers drop downs with the most performant and effective data cleansing methods, allowing business users of any skillset to run the entire data pipeline from start to finish.

Conclusion

Ensuring data quality is not just a box to be checked in the machine learning journey. Rather, data quality is the foundation upon which the entire edifice stands. As the boundaries of what’s possible with ML are continually pushed, the age-old “garbage in, garbage out” adage will still apply. Businesses must thus strive to give data quality the attention it rightfully deserves. After all, the future of machine learning is not just about more complex algorithms or faster computation. Producing accurate, fair and reliable models – ones built based on high-quality data – is also essential to effective ML.

Learn More

To learn more about the end-to-end flow of data and how FP&A interacts with data, stay tuned for additional posts from our Sensible ML blog series. You can also download our white paper here.

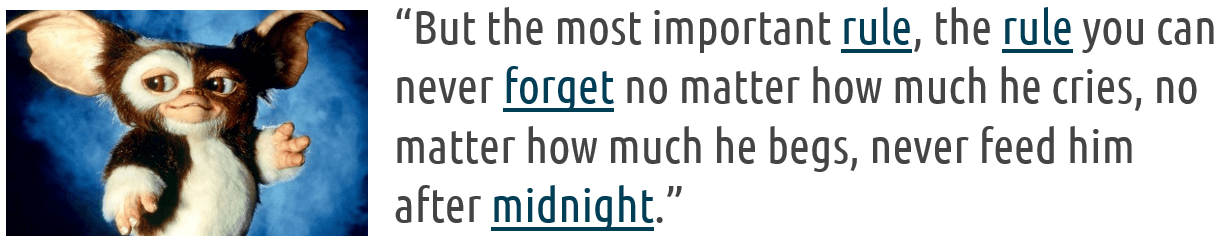

Organizations everywhere rely on data to make informed decisions and improve the bottom line through data management. But with the vast amount of data today, things can quickly get out of control and spawn data gremlins (i.e., little pockets of disconnected, ungoverned data) that wreak havoc on the organization.

Remember those adorable creatures that transformed into destructive, mischievous creatures when fed after midnight in the 1984 comedy horror film Gremlins? (see Figure 1)

In the same way, data gremlins – aka “technical debt” – can arise if your systems are not flexible to enable finance to deliver. Effectively managing data to prevent data gremlins from wreaking havoc is crucial in any modern organization – and that requires first understanding the rise of data gremlins.

Do You Have Data Gremlins?

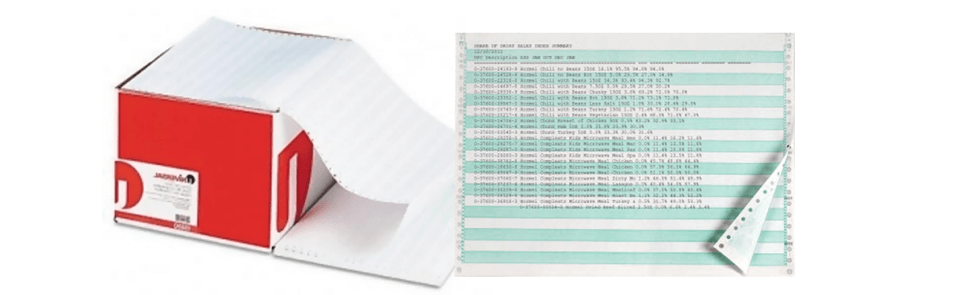

How do data gremlins arise, and how do they proliferate so rapidly? The early beginnings started with Excel. People in Finance and operations would get big stacks of green bar reports (see Figure 2). Don’t remember those? They looked like the image below and were filed and stacked in big rooms.

When you needed data, you pulled the report, re-typed the data into Excel, added some formatting and calculations, printed the spreadsheet and dropped it in your boss’s inbox. Your boss would then review and make suggestions and additions till confident (a relative term here!) that sharing the spreadsheet with upper management would be useful, and voila, a gremlin is born.

And gremlins are bad for the organization.

The Rise of Data Gremlins

Data gremlins are not a new phenomenon but one that can severely impact the organization, leading to wasted time and resources, lost revenue, and a damaged reputation. For that reason, having a robust data management strategy is essential to prevent data gremlins from causing havoc.

As systems and integration got more sophisticated and general ledgers became a reliable book of record, data gremlins should have faded out of existence. But did they? Nope, not even a little. In fact, data gremlins grew faster than ever partly due to the rise of the most popular button on any report, anywhere at the time – you guessed it – the “Export to .CSV” button. Creating new gremlins became even easier and faster, and management started habitually asking for increasingly more analysis that could easily be created in spreadsheets (see Figure 3). To match the demand in 2006, Microsoft increased the number of rows of data a single sheet could have to a million rows. A million! And people cheered!

However, errors were buried in those million-row spreadsheets, not in just some spreadsheets, but in almost ALL of them. The spreadsheets had no overarching governance and could not be automatically checked in any way. As a result, those errors would live for months and years. Any consultant who experienced those spreadsheets will confirm stories of people adding “+1,000,000” to a formula as a last-minute adjustment and then forgetting to remove the addition later. Major companies reported incorrect numbers to the street, and people lost jobs over such errors.
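The "+1,000,000" story describes a specific kind of error that an automated check can look for: a large number typed directly into a formula. As a rough sketch, assuming formulas have already been exported as plain text keyed by cell address, a regular expression can flag such literals. The helper name, the threshold and the sample formulas are hypothetical, and the pattern is a heuristic that will not cover every formula syntax.

```python
import re
from typing import Dict, List, Tuple

# A numeric literal that is not part of a cell reference (B90), a sheet or
# function name (LOG10), a row range (2:90) or a longer number.
NUMBER = re.compile(r"(?<![A-Za-z$:.\d])\d+(?:\.\d+)?(?![\d.:(])")


def find_hardcoded_adjustments(
    formulas: Dict[str, str], threshold: float = 1_000.0
) -> List[Tuple[str, float]]:
    """Return (cell, value) pairs for literals at or above the threshold."""
    findings: List[Tuple[str, float]] = []
    for cell, formula in formulas.items():
        for match in NUMBER.finditer(formula):
            value = float(match.group())
            if value >= threshold:
                findings.append((cell, value))
    return findings


# Hypothetical formulas pulled from a spreadsheet.
sheet = {
    "C10": "=SUM(B2:B90)+1000000",  # last-minute plug that was never removed
    "C11": "=A1*1.05",              # small growth factor, below the threshold
    "C12": "=B2+C3",                # references only
}

print(find_hardcoded_adjustments(sheet))
```

A scan like this only helps if someone runs it. Spreadsheets with no overarching governance have no place to enforce such a check, which is how the errors survive for months or years.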

As time passed, the tools got more sophisticated – from MS Excel and MS Access to departmental planning solutions such as Anaplan, Essbase, Vena Solutions, Workday Adaptive and others. Yet none stand up to the level of reliability IT is tasked with achieving. The controls and audits are nothing compared to what enterprise resource planning (ERP) solutions provide. So why do such tools continue to proliferate? Who feeds them after midnight, so to speak? The real reason is that these departmental planning systems are like a hammer. Every time a new model or need for analysis crops up, it’s “Let’s build another cube.” Even if that particular data structure is not the best answer, Finance has a hammer, and they are going to pound something with it. And why should they do their diligence to understand the proliferation of Gremlins? (see Figure 4)

The relationship between Finance and IT can best be described as “complicated.” ERPs and data warehouses are secure, managed environments but offer almost no flexibility for Finance or Operations to do any sophisticated reporting or analysis that has not been created for them by IT. Requests for new reports are made, often through a ticket system, and the better IT is at satisfying these requests, the longer the ticket queue becomes. Suddenly the team is doing nothing but reporting, and that leaves Finance thinking, “How the heck is there a team of people in IT not focused on making the business more efficient?”

The answer suggested by the mega ERP vendors is to stop doing that. End users don’t really need that data, that level of granularity or that flexibility. They need to learn to simplify and not worry about trivial things. For example, end users don’t need visibility into what happens in a legal consolidation or a sophisticated forecast. “Just trust us. We will do it,” ERP vendors say.

Here’s the problem: Finance is tasked with delivering the right data at the right time with the right analysis. What is the result of just “trusting” ERP vendors? Even more data gremlins. More manual reconciliations. Less security and control over the most critical data for the company. In other words, an organization can easily spend $30M on a secure, well-designed ERP system that doesn’t fix the gremlin problem – emphasizing why organizations must control the data management process.

The Importance of Controlling Data Management and Reducing Technical Debt

Effective data management enables businesses to make informed decisions based on accurate and reliable data. But controlling data management is what prevents data gremlins and reduces technical debt. Data quality issues are perpetuated by the gremlins and can arise when proper data management practices aren’t in place. These issues can include incomplete, inconsistent or inaccurate data, leading to incorrect conclusions, poor decision-making and wasted resources.

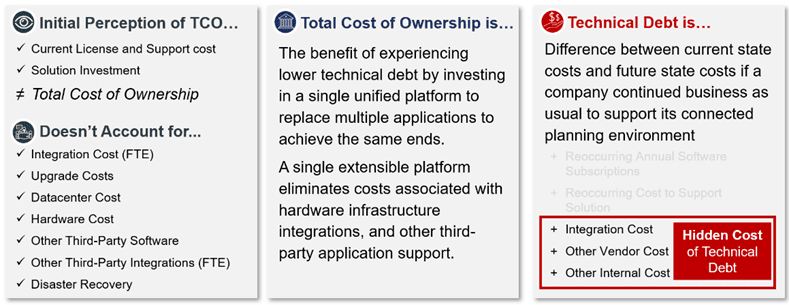

One of the biggest oversights when dealing with data gremlins is only focusing on Return on Investment (ROI) and dismissing fully burdened technical debt (see Figure 5). Many finance teams use performance measurements like Total Cost of Ownership (TCO) and ROI to qualify that the solution is good for the organization, but rarely does the organization dive deeper – beyond the performance measurements to include opportunities to reduce implementation and maintenance waste. Unfortunately, this view of performance measurements does not account for hidden complexities and costs associated with the negative impacts of data gremlin growth.

Technical Debt is MORE than the Total Cost of Ownership

Unfortunately, data gremlins can occur at any stage of the data management process, from data collection to analysis and reporting. These gremlins can be caused by various factors, such as human error, system glitches or even malicious activity. For example, a data gremlin could be a missing or incorrect field in a database, resulting in inaccurate calculations or reporting.

Want proof? Just think about the long, slow process you and your team engage in when tracking down information between fragmented sources and tools rather than analyzing results and helping your business partners act. Does this drawn-out process sound familiar?

The good news is that another option exists. Unifying these multiple processes and tools can provide more automation, remove the complexities of the past and meet the diverse requirements of even the most complex organization, both today and well into the future. The key is building a flexible yet governed environment that allows problems and new analyses to be created within the framework – no Gremlins.

Sunlight on the Data Gremlins

At OneStream, we’ve lived and managed this complicated relationship our entire careers. We even made gremlins back when the answer for everything was “build another spreadsheet.” But we’ve eliminated the gremlins in spreadsheets only to replace them with departmental apps or cubes that are just bigger, nastier gremlins. Our battle scars have taught us that gremlins, while easy to use and manage, are not the answer. Instead, they proliferate and cause newer and bigger hard-to-solve problems of endless data reconciliation.

For that reason, OneStream was designed and built from the ground up to eliminate gremlins, including the need for them in the future (see Figure 6). OneStream combines all the security, governance and audit needed to ensure accurate data – and does it all in one place without the need to walk off-prem while allowing the flexibility to be leveraged inside the centrally defined framework. In OneStream, organizations can leverage Extensible Dimensionality to provide value to end users to “do their thing” without having to push the “Export to .CSV” button.

OneStream is also a platform in the truest sense of the word. The platform provides direct data integration to source data, drill back to that data, and flexible, easy-to-use reporting and dashboarding tools.

That allows IT to eliminate the non-value add cost of authoring reports AND the gremlins – all in one fell swoop.

Finally, our platform is the only EPM platform allowing organizations to develop their functionality directly on the platform. That’s correct – OneStream is a full development platform where organizations can leverage all the platform resources of integration and reporting needed for organizations to deliver their own Intellectual Property (IP). They can even encrypt the IP in the platform or share it with others.

OneStream, in other words, allows organizations to manage their data effectively. In our e-book on financial data quality management, we shared the top 3 goals for effective financial data quality management with CPM:

- Simplified data integration – Direct integration to any open GL/ERP system empowers users to drill back and drill through to source data.

- Improved data integrity – Powerful pre-data and post-data loading validations and confirmations that ensure the right data is available at every step in the process.

- Increased transparency – 100% transparency and audit trails for data, metadata and process change visibility from report to the source.
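The second goal, pre-data and post-data loading validations and confirmations, can be pictured as two gates around the load step. The sketch below is a generic illustration rather than OneStream functionality. The account map, the function names and the tolerance are assumptions.

```python
from typing import Dict, List, Tuple


def validate_before_load(rows: List[dict], account_map: Dict[str, str]) -> List[Tuple[int, str]]:
    """Pre-load validation: every row needs a mapped account and a numeric amount."""
    errors: List[Tuple[int, str]] = []
    for index, row in enumerate(rows):
        if row.get("account") not in account_map:
            errors.append((index, "unmapped account"))
        if not isinstance(row.get("amount"), (int, float)):
            errors.append((index, "amount is not numeric"))
    return errors


def load(rows: List[dict], account_map: Dict[str, str]) -> Dict[str, float]:
    """Map source accounts to target accounts and total the amounts."""
    totals: Dict[str, float] = {}
    for row in rows:
        target = account_map[row["account"]]
        totals[target] = totals.get(target, 0.0) + row["amount"]
    return totals


def confirm_after_load(rows: List[dict], loaded: Dict[str, float], tolerance: float = 0.005) -> bool:
    """Post-load confirmation: the loaded total must reconcile to the source total."""
    source_total = sum(row["amount"] for row in rows)
    return abs(source_total - sum(loaded.values())) <= tolerance


# Hypothetical mapping and trial balance extract.
account_map = {"4000": "Revenue", "4010": "Revenue", "5000": "Expenses"}
extract = [
    {"account": "4000", "amount": 1200.0},
    {"account": "4010", "amount": 300.0},
    {"account": "5000", "amount": -450.0},
]

if not validate_before_load(extract, account_map):
    result = load(extract, account_map)
    print(result, confirm_after_load(extract, result))
```

Rows that fail the first gate never reach the load, and a load that fails the second gate is known to be wrong before anyone reports on it.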

Conclusion

Data gremlins can disrupt business operations and lead to severe business implications, making it essential for organizations to control their data management processes before data gremlins emerge. By developing a data management strategy, investing in robust data management systems, conducting regular data audits, training employees on data management best practices and having a disaster recovery plan, organizations can prevent data-related issues and ensure business continuity.

Learn More

To learn more about how organizations are moving on from their data gremlins, download our whitepaper titled “Unify Connected Planning or Face the Hidden Cost.”

Machine learning (ML) has the potential to revolutionize Enterprise Performance Management (EPM) by providing organizations with real-time insights and predictive capabilities across planning and forecasting processes. With the ability to process vast amounts of data, ML algorithms can help organizations identify patterns, trends and relationships that would otherwise go unnoticed. And as the technology continues to evolve and improve, even greater benefits are likely to emerge in the future as Finance leverages the power of ML to achieve financial goals.

Join us as we examine Sensible ML for EPM – Future of Finance at Your Fingertips.

The Shift to Intelligent Finance

For CFOs, whether artificial intelligence (AI) and ML will play a role across enterprise planning processes is no longer a question. Today, the question instead focuses on how to operationalize ML in ways that return optimal results and scale. The answer is where things get tricky.

Why? Business agility is critical in the rapidly changing world of planning. To think fast and move first, organizations must overcome challenges spanning the need to rapidly grow the business, accurately predict future demand, anticipate unforeseen market circumstances and more. Yet the increasing volumes of data across the organization make it difficult for decision-makers to zero in on the necessary data and extrapolate the proper insights to positively impact planning cycles and outcomes. Further exacerbating the problem, many advanced analytics processes and tools only leverage high-level historical data, forcing decision-makers to re-forecast from scratch whenever unforeseeable market shifts hit.

But with AI and ML, business analysts can analyze and correlate the most relevant internal/external variables. And the variables then contribute to forecasting accuracy and performance across the Sales, Supply Chain, HR and Marketing processes that comprise financial plans and results.

Those dynamics underscore why now is the time for Intelligent Finance.

Over the coming weeks, we’ll share a four-part blog series discussing the path toward ML-powered intelligent planning. Here’s a sneak peek at the key topics in our Sensible ML for EPM series:

- Part 1: 4 Driving Forces for Change. These forces have the potential to accelerate ML and move organizations away from descriptive and diagnostic analytics (explaining what happened and why) and toward predictive and prescriptive analytics.

- Part 2: Increase Accuracy and Transparency. Today more than ever, organizations are looking to become more agile, accurate and transparent with their financial plans to stay competitive. And Sensible ML can help. It allows input from users closer to the business to infuse business intuition into the model, which can increase accuracy and ensure all the available information is considered (see Figure 1).

- Part 3: Key Capabilities for Intelligent Planning. Sensible ML is unlike “most” predictive analytics forecasting capabilities, which look at prior results and statistics and generate forecasts based on what happened in the past. Specifically, Sensible ML has the capability not only to look at prior results but also to take on additional business intuition – such as events, pricing, competitive information and weather – to help drive more precise and robust forecasting.

- Part 4: Customer Success Stories. Every customer must be a reference and a success. OneStream’s mission statement drives everything we do. Taking a deeper look into customers who have reaped the benefits of leveraging Sensible ML can help any organization gain true predictive insights.

Regardless of where you are in your Finance journey, our Sensible ML for EPM series is designed to share insights from the experience of OneStream’s team of industry experts. We recognize, of course, that every organization is unique – so please assess what’s most important to you based on the specific needs of your organization.

Conclusion

The aspiration of ML-powered plans is nothing new. But to remain competitive amid the increasing pace of change and technology disruption, Finance leaders must think differently to finally conquer the complexities inherent in traditional enterprise planning. ML has the potential to greatly improve EPM by providing organizations with real-time insights and predictive analytics. However, organizations must overcome challenges (e.g., ensuring good data quality and selecting the right ML algorithm) to achieve success. As ML continues to evolve, more and more organizations are likely to leverage its power to drive better financial and operational outcomes.

Several challenges lie ahead for organizations of all sizes, but one of the most important decisions will be implementing the right ML solution – one that can effectively align all aspects of planning and elevate the organization toward its strategic goals. Sensible ML answers that call. It brings power and sophistication to organizations, increasing the velocity of forecasting processes with unprecedented transparency and alignment to business performance.

Learn More

To learn more about the value of Sensible ML, download our whitepaper titled “Sensible Machine Learning for CPM – Future Finance at Your Fingertips.” And don’t forget to tune in for additional posts from our machine learning blog series!

Today, confidence in data quality is needed more than ever. Executives must constantly respond to a multitude of changes and be provided with accurate and timely data to make the right decisions on what actions to take. It’s, therefore, more critical than ever that organisations have a clear strategy for ongoing financial data quality management (FDQM). Why? Well, better outcomes result from better decisions. And high-quality data gives organisations the confidence needed to get it right. This confidence in data then ultimately drives better performance and reduces risk.

Why Is Data Quality Important?

Data quality matters for several key reasons, as shown by research. According to Gartner,[1] poor data quality costs organisations an average of $12.9 million annually. Those costs accumulate not only from the wasted resource time in financial and operational processes but also – and even more importantly – from missed revenue opportunities.

As organisations turn to better technology to help drive better future direction, the emphasis placed on financial data quality management in enterprise systems has only increased. Gartner also predicted that, by 2022, 70% of organisations would rigorously track data quality levels via metrics, improving quality by 60% to significantly reduce operational risks and costs.

In a report on closing the data–value gap, Accenture[2] said that “without trust in data, organisations can’t build a strong data foundation”. Only one-third of firms, according to the report, trust their data enough to use it effectively and derive value from it.

In short, the importance of data quality is becoming increasingly clear to many organisations – creating a compelling case for a change in financial reporting software evaluations. There’s now real momentum to break down the historic barriers and move forward with renewed confidence.

Breaking Down the Barriers to Data Quality

Financial data quality management has been difficult, if not virtually impossible, to achieve for many organisations. Why? Well, in many cases, legacy corporate performance management (CPM) and ERP systems were simply not built to work together naturally and instead tended to remain separate as siloed applications, each with its own purpose. Little or no connectivity exists between the systems, so users are often forced to resort to manually retrieving data from one system, manually transforming the data, and then loading it into another system – which requires significant time and risks lower quality data.

There’s also often a lack of front-office controls that leads to a host of issues:

- Poor data input and limited validation.

- Inefficient data architecture with multiple legacy IT systems.

- Lack of business support for the value of data transformation.

- Inadequate executive-level attention, which prevents an organisation from laying the effective building blocks of financial data quality.

All of that contributes to significant barriers to ensuring good data quality. Here are the top 3 barriers:

- Multiple disconnected systems – Multiple systems and siloed applications make it difficult to merge, aggregate, and standardise data in a timely manner. Individual systems are added at different times, often using different technology and different dimensional structures.

- Poor quality data – Data in source systems is often incomplete, inconsistent, or out of date. Plus, many integration methods simply copy data complete with any existing errors – lacking any validations or governance.

- Executive buy-in – Failing to get executive buy-in could be due to perception or approach. After all, an effective data quality strategy requires focus and investment. With so many competing projects in any organisation, the case for a strategy must be compelling and effectively demonstrate the value that data quality can deliver.

Yet even when such barriers are acknowledged, getting the Finance and Operations teams to give up their financial reporting software tools and traditional ways of working can be extremely challenging. The barriers, however, are often not as difficult to surmount as some believe.

The solution may be as simple as demonstrating how much time can be saved by transitioning to newer tools and technology. Such transitions reduce the time investment and can dramatically improve the quality of the data through effective integration, with both validation and control built into the system itself.

The Solution

There are some who believe Pareto’s law applies to data quality: Typically, 20% of data enables 80% of use cases. It is therefore critical that an organisation follows these 3 steps before embarking on any project to improve financial data quality:

- Define quality – Determine what quality means to the organisation, agree on the definition, and set metrics to achieve the level with which everyone will feel confident.

- Streamline collection of data – Ensure the number of disparate systems is minimised and the integrations use world-class technology that brings consistency to the data collection processes.

- Identify the importance of data – Know which data is the most critical for the organisation and start there – with the 20%. Then move on when the organisation is ready.
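The "define quality" step can be made concrete with a small amount of code. The following is a minimal sketch, assuming that quality is expressed as two simple metrics (completeness and validity) and that the organisation has agreed a target for each. The `Record` type, the metric names, the sample data and the target values are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Record:
    entity: str
    account: str
    amount: Optional[float]  # None marks a missing value


def completeness(records):
    """Share of records that have a non-missing amount."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.amount is not None)
    return filled / len(records)


def validity(records, known_accounts):
    """Share of records whose account exists in the agreed chart of accounts."""
    if not records:
        return 0.0
    valid = sum(1 for r in records if r.account in known_accounts)
    return valid / len(records)


def failing_metrics(metrics, targets):
    """Return the names of metrics that fall below their agreed target."""
    return [name for name, target in targets.items() if metrics[name] < target]


# Hypothetical sample data: one missing amount, one unknown account.
records = [
    Record("DE01", "4000", 1200.0),
    Record("DE01", "4010", None),
    Record("FR01", "4000", 800.0),
    Record("FR01", "9999", 50.0),
]
known_accounts = {"4000", "4010", "5000"}

metrics = {
    "completeness": completeness(records),
    "validity": validity(records, known_accounts),
}
# Hypothetical targets agreed by the organisation.
targets = {"completeness": 0.95, "validity": 0.70}
failing = failing_metrics(metrics, targets)
```

In line with the third step, the same checks could be run first on only the most critical data and extended to further data sets once the organisation is ready.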

At its core, a fully integrated CPM software platform with built-in financial data quality (see Figure 1) is critical for organisations to drive effective transformation across Finance and Lines of Business. A key requirement is providing 100% visibility from reports to data sources – meaning all financial and operational data must be clearly visible and easily accessible. Key financial processes should be automated, and using a single interface would mean the enterprise can utilise its core financial and operational data with full integration to all ERPs and other systems.

The solution should also include guided workflows to protect business users from complexity by guiding them uniquely through all data management, verification, analysis, certification, and locking processes.

OneStream offers all of that and more. With a strong foundation in financial data quality, OneStream allows organisations to integrate and validate data from multiple sources and make confident decisions based on accurate financial and operating results. OneStream’s financial data quality management is not a module or separate product but built into the core of the OneStream platform — providing strict controls to deliver the confidence and reliability needed to ensure quality data.

In our e-book on Financial Data Quality Management, we shared the following 3 goals for effective financial data quality management with CPM:

- Simplify data integration – Direct integration to any open GL/ERP system and empower users to drill-back and drill-through to source data.

- Improve data integrity – Powerful pre-data and post-data loading validations and confirmations ensure the right data is available at every step in the process.

- Increase transparency – 100% transparency and audit trails for data, metadata, and process change visibility from report to source.
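To illustrate what pre-load and post-load validations can look like in general, here is a minimal sketch. It does not represent OneStream's implementation or API. It assumes a hypothetical account mapping table, a pre-load check that rejects unmapped source accounts, and a post-load check that the loaded total reconciles to the source total. All function names and data are illustrative.

```python
def pre_load_check(rows, account_map):
    """Return source accounts that have no mapping to a target account."""
    return sorted({r["account"] for r in rows if r["account"] not in account_map})


def load(rows, account_map):
    """Map source accounts to target accounts and sum amounts per target."""
    totals = {}
    for r in rows:
        target = account_map[r["account"]]
        totals[target] = totals.get(target, 0.0) + r["amount"]
    return totals


def post_load_check(rows, totals, tolerance=0.005):
    """Confirm the loaded total matches the source total within a tolerance."""
    source_total = sum(r["amount"] for r in rows)
    loaded_total = sum(totals.values())
    return abs(source_total - loaded_total) <= tolerance


# Hypothetical trial balance rows and mapping table.
rows = [
    {"account": "1000", "amount": 500.0},
    {"account": "1010", "amount": 250.0},
    {"account": "2000", "amount": -750.0},
]
account_map = {"1000": "Cash", "1010": "Cash", "2000": "Payables"}

unmapped = pre_load_check(rows, account_map)
if unmapped:
    # Stop before loading so errors are not copied into the target system.
    raise ValueError(f"Unmapped accounts: {unmapped}")
totals = load(rows, account_map)
reconciled = post_load_check(rows, totals)
```

Running the pre-load check before anything is written is what stops existing errors from simply being copied across. The post-load check then confirms that nothing was lost or duplicated along the way.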

Delivering 100% Customer Success

Here’s one example of an organisation that has streamlined data collection and improved data quality in the financial close, consolidation, and reporting process leveraging OneStream’s unified platform.

MEC Holding GmbH – headquartered in Bad Soden, Germany – manufactures and supplies industrial welding consumables and services, cutting systems, and medical instruments for OEMs in Germany and internationally. The company operates through three units: Castolin Eutectic Systems, Messer Cutting Systems, and BIT Analytical Instruments.

MEC has 36 countries reporting monthly, including over 70 entities and 15 local currencies, so the financial consolidation and reporting process includes a high volume of intercompany activity. With OneStream, data collection is now much easier with Guided Workflows leading users through their tasks. Users upload trial balances on their own vs. sending to corporate, which speeds the process and ensures data quality. Plus, the new system was very easy for users to learn and adopt with limited training.

MEC found that the confidence from having ‘one version of the truth’ is entirely possible with OneStream.

Learn More

If your Finance organisation is being hindered from unleashing its true value, maybe it’s time to evaluate your internal systems and processes and start identifying areas for improvement. To learn how, read our whitepaper on Conquering Complexity in the Financial Close.

Financial data quality management (FDQM) has often been developed as merely an afterthought or presented as simply an option by most corporate performance management (CPM) vendors. In reality, it should be the foundation of any CPM system. Why? Well, having robust FDQM capabilities reduces not only errors and their consequences, but also downtime caused by breaks in processes and the associated costs of inefficiency.

Having effective FDQM means providing strict audit controls alongside standard, defined, and repeatable processes for maximum confidence and reliability in any business user process. Effective FDQM also enables an organisation to shorten financial close and budgeting cycles and get critical information to end-users faster and more easily.

Continuing our Re-Imagining the Close Blog series here, we examine why financial data quality management is an essential requirement in today’s corporate reporting environment. Poor data quality can lead to errors or omissions in financial statements, which often result in compliance penalties, loss of confidence from stakeholders, and potentially a reduction in market value.

Increasing Interest in Financial Data Quality Management

Organisations are now realising that FDQM is critical. Why? Finance teams must ensure not only that the financial reporting gets out of the door accurately but also that all forward-looking data and guidance is fully supported by ‘quality’ actual results.

An old expression used around financial reporting captures this idea well – ‘if you put garbage in, you get garbage out’.

A lack of robust data integration capabilities creates a multitude of problems. Here are just a few:

- Manual steps that waste resources and time

- Risk of errors

- Poor financial decisions

- Lack of traceability

- Lack of auditability

In many cases, legacy CPM and ERP systems were simply not built to work together naturally and tend to remain separate as siloed applications, each with their own purpose. Often, little or no connectivity exists between the systems, and users are forced to resort to manually retrieving data from one system, manually transforming the data, and then loading it into another system.

As organisations get increasingly complex and data volumes grow ever larger, it is only natural to turn attention to the quality of the data being input. Not to mention, as more advanced capabilities are explored and adopted – such as artificial intelligence (AI) and machine learning (ML) – organisations are being forced to first examine their data and then take steps to ensure effective data quality. Why are these steps necessary? Simply put, the best results from AI, ML, and other advanced technologies are entirely dependent on good, clean quality data right from the start.

What’s the Solution?

A fully integrated CPM/EPM platform with FDQM at its core is critical for organisations to drive effective transformation across Finance and lines of business. A key requirement is 100% visibility from reports to sources – all financial and operational data must be clearly visible and easily accessible. Key financial processes should be automated, and using a single interface would mean the enterprise can utilise its core financial and operational data with full integration to all ERPs and other systems.

The solution must also include guided workflows to protect business users from complexity by guiding them uniquely through all data management, verification, analysis, certification and locking processes.

Users should be able to achieve effective FDQM and verification through standardised and simplified data collection and analysis with reports at every step in the workflow. The workflows must be guided to provide standard, defined, and repeatable processes for maximum confidence and reliability in a business user-driven process. What’s the end result? The simplification of business processes and a reduction in errors and inefficiencies across the enterprise.

Why OneStream?

OneStream’s strong foundations in the FDQM arena allow for unparalleled flexibility and visibility into the data loading and integration process.

OneStream’s Financial Data Quality Management is not a module or separate product but is a core part of OneStream’s unified platform – providing strict controls to deliver confidence and reliability in the quality of your data. How? Financial data quality risk is managed using fully auditable system integration maps, and validations are used to control submissions from remote sites. Data can be loaded via direct connections to source databases or via any file format. Audit reports can be filtered based on materiality thresholds – ensuring one-time reviews at appropriate points in the process.
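The idea of filtering a review by a materiality threshold can be sketched generically. The example below is not OneStream functionality. It assumes two hypothetical sets of account balances (current and prior period) and a hypothetical threshold, and it returns only the changes large enough to warrant review.

```python
def material_variances(current, prior, threshold):
    """Return (account, change) pairs whose absolute change exceeds the
    threshold, ordered from the largest absolute change to the smallest."""
    changes = []
    for account in sorted(set(current) | set(prior)):
        delta = current.get(account, 0.0) - prior.get(account, 0.0)
        if abs(delta) > threshold:
            changes.append((account, delta))
    return sorted(changes, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical balances; "Fees" is missing from the current period.
current = {"Revenue": 120_000.0, "Travel": 4_300.0, "Rent": 18_000.0}
prior = {"Revenue": 100_000.0, "Travel": 4_100.0, "Rent": 18_000.0, "Fees": 6_000.0}

flagged = material_variances(current, prior, threshold=5_000.0)
```

With these sample figures, only the Revenue and Fees movements exceed the threshold, so a reviewer would see two items rather than four.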

In essence, OneStream’s unified platform offers market-leading data integration capabilities with seamless connections to multiple sources.

OneStream Integration Connectors offer direct integration with any open GL/ERP or other source systems, providing the following benefits:

- Delivers fast and efficient direct-to-source system integration processes with built-in robotic process automation (RPA).

- Drills down, drills back, and drills through to transactional details, including journal entries.

- Provides direct integration with drill back to over 250 ERP, HCM, CRM, and other systems (e.g., Oracle, PeopleSoft, JDE, SAP, Infor, Microsoft AX/Dynamics, and more).
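Drill-back depends on keeping a link between each consolidated number and the transactions that produced it. The following is a minimal, generic sketch of that idea and not a depiction of OneStream's connectors. The transaction layout, the journal entry identifiers and the function names are hypothetical.

```python
from collections import defaultdict


def consolidate(transactions):
    """Sum amounts per account and remember which transactions fed each total."""
    totals = defaultdict(float)
    lineage = defaultdict(list)
    for tx in transactions:
        totals[tx["account"]] += tx["amount"]
        lineage[tx["account"]].append(tx["id"])
    return dict(totals), dict(lineage)


def drill_back(account, lineage, transactions):
    """Return the source transactions behind a consolidated account total."""
    ids = set(lineage.get(account, []))
    return [tx for tx in transactions if tx["id"] in ids]


# Hypothetical journal entries from a source ledger.
transactions = [
    {"id": "JE-001", "account": "Revenue", "amount": 900.0},
    {"id": "JE-002", "account": "Revenue", "amount": 100.0},
    {"id": "JE-003", "account": "Rent", "amount": -400.0},
]

totals, lineage = consolidate(transactions)
details = drill_back("Revenue", lineage, transactions)
```

Because the lineage is recorded at load time, a question about the Revenue total can be answered by listing the two journal entries behind it, without re-querying or re-keying the source data.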

100% Customer Success

AAA Life Insurance implemented OneStream to support all their financial consolidation, reporting, budgeting, and analysis needs. Since then, AAA Life has streamlined the financial close process and dramatically improved not only visibility into data but also transparency into results. They can now drill down from OneStream’s calculated or consolidated numbers all the way back to the transactional ERP system to get rapid answers to critical questions.

The dream of ‘one version of the truth’ is entirely possible with OneStream.

Learn More

To learn more about how you can re-imagine the financial close with the unrivalled power of OneStream’s Intelligent Finance Platform, download our whitepaper. And don’t forget to tune in for additional posts from our Re-Imagining the Financial Close blog series.

Today’s CFOs and controllers need to manage their critical, enterprise-wide financial data and processes as effectively as possible. That data needs to be timely, accurate and easily accessible for insightful reporting and analysis to maintain a competitive edge. Accordingly, their corporate performance management (CPM) solutions need to be robust and scalable and to provide full integration with their ERP, HCM, CRM and other systems.

Whether you’re shopping around for an EPM solution or looking to switch over to a new solution, OneStream’s drill-through capability is the functionality you never knew you needed.

Many vendors claim to handle complex consolidations, but there are several significant differences between the underlying engine that is needed to run financial data aggregation and the engine used to run straight aggregation or simple consolidations. Any vendor that uses Microsoft Analysis Services, Oracle Essbase, Cognos, TM1, SAP HANA or a similar straight aggregation engine will face numerous challenges to address core financial consolidation requirements. Let’s examine this more closely.