Today, confidence in data quality is needed more than ever. Executives must constantly respond to a multitude of changes, and they need accurate and timely data to decide what actions to take. It is therefore critical that organisations have a clear strategy for ongoing financial data quality management (FDQM). Why? Better outcomes result from better decisions, and high-quality data gives organisations the confidence needed to get those decisions right. This confidence in data ultimately drives better performance and reduces risk.

Why Is Data Quality Important?

Data quality matters for several key reasons, as shown by research. According to Gartner,[1] poor data quality costs organisations an average of $12.9 million annually. Those costs accumulate not only from the wasted resource time in financial and operational processes but also – and even more importantly – from missed revenue opportunities.

As organisations turn to better technology to help drive better future direction, the emphasis placed on financial data quality management in enterprise systems has only increased. Gartner also predicted that, by 2022, 70% of organisations would rigorously track data quality levels via metrics, improving quality by 60% to significantly reduce operational risks and costs.

In a report on closing the data–value gap, Accenture[2] said that “without trust in data, organisations can’t build a strong data foundation”. Only one-third of firms, according to the report, trust their data enough to use it effectively and derive value from it.

In short, the importance of data quality is becoming increasingly clear to many organisations – creating a compelling case for changing how they evaluate financial reporting software. There’s now real momentum to break down the historic barriers and move forward with renewed confidence.

Breaking Down the Barriers to Data Quality

Financial data quality management has been difficult, if not virtually impossible, to achieve for many organisations. Why? In many cases, legacy corporate performance management (CPM) and ERP systems were simply not built to work together and instead remained siloed applications, each with its own purpose. Little or no connectivity exists between the systems, so users are often forced to retrieve data manually from one system, transform it manually, and then load it into another system – which takes significant time and risks lowering data quality.

There’s also often a lack of front-office controls that leads to a host of issues:

- Poor data input and limited validation.

- Inefficient data architecture with multiple legacy IT systems.

- Lack of business support for the value of data transformation.

- Inadequate executive-level attention, which prevents an organisation from laying effective building blocks for financial data quality.

All of that contributes to significant barriers to ensuring good data quality. Here are the top 3 barriers:

- Multiple disconnected systems – Multiple systems and siloed applications make it difficult to merge, aggregate, and standardise data in a timely manner. Individual systems are added at different times, often using different technology and different dimensional structures.

- Poor quality data – Data in source systems is often incomplete, inconsistent, or out of date. Plus, many integration methods simply copy data complete with any existing errors – lacking any validations or governance.

- Executive buy-in – Failing to get executive buy-in could be due to perception or approach. After all, an effective data quality strategy requires focus and investment. With so many competing projects in any organisation, the case for a strategy must be compelling and effectively demonstrate the value that data quality can deliver.
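
To make the first two barriers concrete, here is a minimal Python sketch. It is an illustration only, not a description of any particular product. It assumes two hypothetical source systems (`erp_a` and `erp_b`) that use different account codes, plus a made-up mapping table (`ACCOUNT_MAP`) onto a common chart of accounts. Instead of copying rows across as they are, it standardises what it can and reports what it cannot map.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceRow:
    system: str    # which source system the row came from (hypothetical names)
    account: str   # account code as used in that system
    amount: float


# Hypothetical mapping from (system, local account) to a common account name.
ACCOUNT_MAP = {
    ("erp_a", "4000"): "Revenue",
    ("erp_a", "5000"): "CostOfSales",
    ("erp_b", "REV-01"): "Revenue",
}


def standardise(rows):
    """Aggregate rows by common account; collect rows that have no mapping."""
    mapped = {}
    unmapped = []
    for row in rows:
        target = ACCOUNT_MAP.get((row.system, row.account))
        if target is None:
            unmapped.append(row)  # surface the gap instead of loading it silently
            continue
        mapped[target] = mapped.get(target, 0.0) + row.amount
    return mapped, unmapped


rows = [
    SourceRow("erp_a", "4000", 1200.0),
    SourceRow("erp_b", "REV-01", 800.0),
    SourceRow("erp_a", "5000", -700.0),
    SourceRow("erp_b", "COGS-9", -300.0),  # deliberately missing from ACCOUNT_MAP
]

mapped, unmapped = standardise(rows)
print(mapped)
print(unmapped)
```

The design choice worth noting is that unmapped rows are returned to the caller rather than dropped or loaded under a default account, so errors in the source data stay visible.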

Yet even when such barriers are acknowledged, getting the Finance and Operations teams to give up their financial reporting software tools and traditional ways of working can be extremely challenging. The barriers, however, are often not as difficult to surmount as some believe.

The solution may be as simple as demonstrating how much time can be saved by transitioning to newer tools and technology. Such a transition not only reduces the time invested but can also dramatically improve the quality of the data, because effective integration builds both validation and control into the system itself.

The Solution

There are some who believe Pareto’s law applies to data quality: Typically, 20% of data enables 80% of use cases. It is therefore critical that an organisation follows these 3 steps before embarking on any project to improve financial data quality:

- Define quality – Determine what quality means to the organisation, agree on the definition, and set metrics to achieve the level with which everyone will feel confident.

- Streamline collection of data – Ensure the number of disparate systems is minimised and the integrations use world-class technology and apply consistent data collection processes.

- Identify the importance of data – Know which data is the most critical for the organisation and start there – with the 20%. Then move on when the organisation is ready.
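
As a rough illustration of the first and third steps, the Python sketch below defines one possible quality metric and then picks the data to start with. Everything in it is an assumption made for the example: quality is reduced to completeness of required fields, the data set names and use-case counts are invented, and use cases are treated as non-overlapping. An organisation would agree its own definitions and thresholds.

```python
def completeness(records, required_fields):
    """Share of required field values that are present (not None or empty)."""
    total = len(records) * len(required_fields)
    if total == 0:
        return 1.0
    present = sum(
        1
        for record in records
        for field in required_fields
        if record.get(field) not in (None, "")
    )
    return present / total


def critical_datasets(use_cases_by_dataset, coverage=0.8):
    """Pick the most-used data sets until `coverage` of all use cases is reached."""
    total = sum(use_cases_by_dataset.values())
    ranked = sorted(use_cases_by_dataset.items(), key=lambda kv: kv[1], reverse=True)
    chosen, covered = [], 0
    for name, count in ranked:
        if covered >= coverage * total:
            break
        chosen.append(name)
        covered += count
    return chosen


# Hypothetical sample: one of six required values is missing.
records = [
    {"entity": "DE01", "account": "4000", "amount": 100.0},
    {"entity": "DE02", "account": "", "amount": 50.0},
]
score = completeness(records, ("entity", "account", "amount"))

# Hypothetical count of use cases served by each data set.
use_cases = {"general_ledger": 40, "intercompany": 25, "headcount": 10, "travel": 5}
start_with = critical_datasets(use_cases)

print(round(score, 3))
print(start_with)
```

With these invented numbers, two of the four data sets cover at least 80% of the use cases, which is where the first quality effort would go.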

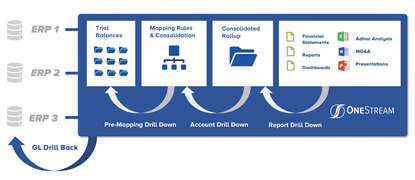

At its core, a fully integrated CPM software platform with built-in financial data quality (see Figure 1) is critical for organisations to drive effective transformation across Finance and Lines of Business. A key requirement is 100% visibility from reports to data sources – meaning all financial and operational data must be clearly visible and easily accessible. Key financial processes should be automated, and a single interface should let the enterprise use its core financial and operational data with full integration to all ERPs and other systems.

The solution should also include guided workflows that protect business users from complexity by guiding each user through all data management, verification, analysis, certification, and locking processes.

OneStream offers all of that and more. With a strong foundation in financial data quality, OneStream allows organisations to integrate and validate data from multiple sources and make confident decisions based on accurate financial and operating results. OneStream’s financial data quality management is not a module or separate product; it is built into the core of the OneStream platform, providing strict controls to deliver the confidence and reliability needed to ensure quality data.

In our e-book on Financial Data Quality Management, we shared the following 3 goals for effective financial data quality management with CPM:

- Simplify data integration – Integrate directly with any open GL/ERP system and empower users to drill back and drill through to source data.

- Improve data integrity – Powerful pre-data and post-data loading validations and confirmations ensure the right data is available at every step in the process.

- Increase transparency – 100% transparency and audit trails for data, metadata, and process change visibility from report to source.
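
The idea of validating before a load and confirming after it can be sketched generically in Python. This is not OneStream’s API; the function names, the record layout, and the sign convention (debits positive, credits negative, so a trial balance nets to zero) are all assumptions made for the illustration.

```python
from decimal import Decimal


def pre_load_checks(trial_balance, known_accounts):
    """Return a list of problems found before loading; an empty list means OK."""
    problems = []
    for line in trial_balance:
        if line["account"] not in known_accounts:
            problems.append(f"unknown account {line['account']}")
    net = sum((line["amount"] for line in trial_balance), Decimal("0"))
    if net != 0:
        problems.append(f"trial balance does not net to zero (off by {net})")
    return problems


def load(trial_balance, target):
    """Add each line's amount to the target store, keyed by account."""
    for line in trial_balance:
        account = line["account"]
        target[account] = target.get(account, Decimal("0")) + line["amount"]


def post_load_confirmation(trial_balance, target):
    """Confirm that per-account totals in the target match the source exactly."""
    expected = {}
    for line in trial_balance:
        account = line["account"]
        expected[account] = expected.get(account, Decimal("0")) + line["amount"]
    return expected == target


known = {"1000", "4000"}  # hypothetical chart of accounts

good_tb = [
    {"account": "1000", "amount": Decimal("500.00")},
    {"account": "4000", "amount": Decimal("-500.00")},
]
target = {}
problems = pre_load_checks(good_tb, known)
if not problems:
    load(good_tb, target)
confirmed = post_load_confirmation(good_tb, target)

bad_tb = [
    {"account": "1000", "amount": Decimal("500.00")},
    {"account": "9999", "amount": Decimal("-499.00")},
]
bad_problems = pre_load_checks(bad_tb, known)

print(problems, confirmed)
print(bad_problems)
```

Amounts use `Decimal` rather than floats so that the net-to-zero check and the source-to-target comparison are exact.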

Delivering 100% Customer Success

Here’s one example of an organisation that has streamlined data collection and improved data quality in the financial close, consolidation, and reporting process using OneStream’s unified platform.

MEC Holding GmbH – headquartered in Bad Soden, Germany – manufactures and supplies industrial welding consumables and services, cutting systems, and medical instruments for OEMs in Germany and internationally. The company operates through three units: Castolin Eutectic Systems, Messer Cutting Systems, and BIT Analytical Instruments.

MEC has 36 countries reporting monthly, including over 70 entities and 15 local currencies, so the financial consolidation and reporting process includes a high volume of intercompany activity. With OneStream, data collection is now much easier, with Guided Workflows leading users through their tasks. Users upload trial balances themselves rather than sending them to corporate, which speeds up the process and ensures data quality. Plus, the new system was very easy for users to learn and adopt with limited training.

MEC found that the confidence from having ‘one version of the truth’ is entirely possible with OneStream.

Learn More

If your Finance organisation is being hindered from unleashing its true value, maybe it’s time to evaluate your internal systems and processes and start identifying areas for improvement. To learn how, read our whitepaper on Conquering Complexity in the Financial Close.
